Why ChatGPT Fails to Answer Some Medical Questions

Decoding AI in Medical Reasoning: The Curious Case of ChatGPT's USMLE Performance

The Unexpected Prodigy

In the world of artificial intelligence, much of the recent buzz has been around ChatGPT, a program that has astonishingly passed the USMLE (United States Medical Licensing Examination), with scores progressively increasing from 65% to a remarkable 82%. The excitement was palpable: the implications of an AI program that can answer medical questions correctly are immense. But does this mean ChatGPT is inching closer to being a doctor? Its success raises intriguing questions about how ChatGPT actually processes and responds to medical queries.

The Fallacy of Exam Performance

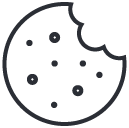

The first misconception we need to address is that exam performance equates to professional competence. We've all known students who excel at exams but do not necessarily make the best physicians. They have mastered the art of recognizing patterns and answering questions correctly, but does that mean they truly understand the subject matter? Similarly, ChatGPT, despite its impressive performance, lacks internal thinking or awareness. It generates answers by repeatedly predicting a probable next word, which does not require understanding the underlying knowledge or the reason behind the question.
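To make "predicting the next word" concrete, here is a minimal sketch: a toy bigram model that only counts which word tends to follow which. It is vastly simpler than ChatGPT (which uses a large neural network, not word counts), and the three-sentence corpus and the helper names `train_bigram` and `predict_next` are hypothetical, invented for illustration. The point is that the model produces a plausible continuation with no notion of what the words mean.

```python
from collections import Counter, defaultdict
from typing import Optional

def train_bigram(corpus: list[str]) -> dict[str, Counter]:
    """Count, for every word, how often each other word directly follows it."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts

def predict_next(counts: dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# Hypothetical mini-corpus; the model learns word order, not medicine.
corpus = [
    "chest pain radiating to the left arm",
    "chest pain radiating to the jaw",
    "sudden chest pain radiating to the back",
]
model = train_bigram(corpus)
print(predict_next(model, "pain"))       # radiating
print(predict_next(model, "radiating"))  # to
print(predict_next(model, "aspirin"))    # None
```

The model "knows" that "radiating" is followed by "to" only because that pattern recurs in its data, which is the distinction the paragraph draws between answering correctly and understanding.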

The Probabilistic Puzzle

The intriguing question is why ChatGPT still gets some questions wrong, despite having been trained on a vast body of text that includes medical information and despite its enormous number of parameters (the GPT-3 model family it was built on has 175 billion). USMLE questions are carefully crafted to test whether a student can determine the most probable answer from the information provided. If a student with limited access to medical knowledge can figure out the correct answer, why does ChatGPT, with its extensive knowledge, sometimes fail?

The Mystery of Misleading Information

Could there be flaws in the question's reasoning or in the information it provides? Could that information be intentionally misleading, shifting which answer looks most probable and leading students (and ChatGPT) astray? If all students consistently chose the same incorrect answer, that would strongly suggest the question was flawed. But if different students pick different wrong answers, could the question be interpreted differently depending on individual thinking styles or perspectives?
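The heuristic above (students converging on one wrong answer versus scattering across several) can be sketched as a small check on the answers a class gave. This is only a rough formalization of the paragraph's reasoning, not a standard psychometric method; formal item analysis is considerably more involved. The function name `diagnose_item`, the 0.5 `agreement` cut-off, and the class data are all hypothetical.

```python
from collections import Counter

def diagnose_item(answers: list[str], key: str, agreement: float = 0.5) -> str:
    """Roughly classify a multiple-choice item from the answers students chose.

    `agreement` is a hypothetical cut-off: the share of all students who must
    converge on a single wrong option before we suspect the item itself.
    """
    if not answers:
        raise ValueError("need at least one answer")
    counts = Counter(answers)
    # Keep only the wrong options and how many students chose each.
    wrong = {option: n for option, n in counts.items() if option != key}
    if not wrong:
        return "all correct"
    top_wrong, top_count = max(wrong.items(), key=lambda item: item[1])
    if top_count / len(answers) >= agreement:
        return f"possibly flawed: students converge on {top_wrong}"
    return "errors scattered: question may be read differently"

# Invented class of 20 students; the keyed (correct) answer is "A".
converging = ["C"] * 14 + ["A"] * 4 + ["B"] * 2
scattered = ["A"] * 8 + ["B"] * 4 + ["C"] * 4 + ["D"] * 4
print(diagnose_item(converging, key="A"))  # possibly flawed: students converge on C
print(diagnose_item(scattered, key="A"))   # errors scattered: question may be read differently
```

The same tally could be applied to repeated ChatGPT answers to one question, which is the comparison the article goes on to ask about.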

The Enigma of AI Errors

When ChatGPT makes a mistake, we must ask: is it consistently making the same mistake, or are its errors random? Because ChatGPT generates text by sampling from a probability distribution over possible next words, it does not necessarily give the same answer each time a question is posed; only if it always picked the single most probable continuation would its answers be fully repeatable. If it consistently chooses the same incorrect answer, we could potentially reason with it and understand why it makes that particular mistake. But what if ChatGPT picks a different option every time? What is happening in the "mind" of ChatGPT?
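The difference between consistent and random errors can be illustrated with a minimal simulation. It assumes, as is common for language models, that the model scores each answer option and then either picks the top score (greedy decoding, written here as temperature 0) or samples with a temperature; the article does not describe ChatGPT's actual decoding settings. The option scores in `LOGITS` and the helper names are invented for illustration.

```python
import math
import random
from collections import Counter

# Hypothetical scores ("logits") for options A-E of one question; invented numbers.
LOGITS = {"A": 2.0, "B": 1.6, "C": 0.3, "D": -0.5, "E": -1.0}

def softmax(logits: dict[str, float], temperature: float) -> dict[str, float]:
    """Turn scores into probabilities; a lower temperature sharpens the peak."""
    scaled = {k: v / temperature for k, v in logits.items()}
    top = max(scaled.values())  # subtract the max for numerical stability
    exps = {k: math.exp(v - top) for k, v in scaled.items()}
    total = sum(exps.values())
    return {k: e / total for k, e in exps.items()}

def answer(logits: dict[str, float], temperature: float, rng: random.Random) -> str:
    """Temperature 0 means greedy: always the top option. Otherwise, sample."""
    if temperature == 0:
        return max(logits, key=logits.get)
    probs = softmax(logits, temperature)
    options = list(probs)
    return rng.choices(options, weights=[probs[o] for o in options], k=1)[0]

def tally(logits: dict[str, float], temperature: float,
          runs: int = 100, seed: int = 0) -> Counter:
    """Pose the 'same question' `runs` times and count which option comes back."""
    rng = random.Random(seed)
    return Counter(answer(logits, temperature, rng) for _ in range(runs))

print(tally(LOGITS, temperature=0))    # Counter({'A': 100})
print(tally(LOGITS, temperature=1.0))  # answers spread over several options
```

If "A" were the wrong answer, greedy decoding would make the same mistake every time, the kind of error one could try to reason about. With sampling, the same underlying distribution yields answers split mostly between A and B, which looks like random error even though nothing about the model's "knowledge" has changed.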

Cracking the Code of Medical Reasoning

Understanding ChatGPT's reasoning process could help us crack the code of medical reasoning in students. It could lead us toward understanding how doctors think and, in turn, revolutionize medical education and practice.
